Deep Dream image synthesis & Deep Style Transfer.

Deep dream synthesis & deep style transfer pair artificial intelligence with human choices to generate a unique composite of two images. The underlying technology was created by Google and released to the public as open source software in 2015. Today there are a handful of desktop programs & mobile apps available, as well as a number of websites that handle much of the fine-tuning for you.

This emerging form of artistic expression has grown a culture of people who are blurring the boundaries between creativity & technology.

BUT, is this real art or a new way to allow everyone to be creative?

My answer is: yes. Yes to both.

STYLING Something THAT is Perfect and ABSOLUTELY DOES NOT NEED my Meddling

The process of making deep style images starts with a person selecting or creating a source image or photo. That image is run through the artificial intelligence software along with a style/texture image that will be infused into it. The software looks deep into the folds and textures of both images and decides where in the source image the infusions will be made, along with how deep those enhancements go.
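The tools mentioned in this post don't spell out exactly what they run, so treat the following as a toy sketch rather than their actual method. It illustrates one idea from published neural style transfer work: "style" is measured by how feature channels correlate with each other regardless of position (a Gram matrix), while "content" is measured position by position. The feature maps, function names, and `style_weight` parameter are all hypothetical; a real system would take its features from the layers of a convolutional network.

```python
# Toy sketch: content vs. style measurements in neural style transfer.
# The feature maps below are hand-made stand-ins, not real network output.

def gram_matrix(features):
    """features: list of channels, each a flat list of activations.
    Returns channel-by-channel correlations. Position is averaged away,
    so the result summarizes texture rather than layout."""
    n = len(features[0])
    return [[sum(a * b for a, b in zip(ci, cj)) / n for cj in features]
            for ci in features]

def style_loss(generated, style):
    """Mean squared difference between the two Gram matrices."""
    g, s = gram_matrix(generated), gram_matrix(style)
    diffs = [(x - y) ** 2 for g_row, s_row in zip(g, s)
             for x, y in zip(g_row, s_row)]
    return sum(diffs) / len(diffs)

def content_loss(generated, content):
    """Mean squared difference between activations, position by position."""
    diffs = [(x - y) ** 2 for g_ch, c_ch in zip(generated, content)
             for x, y in zip(g_ch, c_ch)]
    return sum(diffs) / len(diffs)

def total_loss(generated, content, style, style_weight=1.0):
    # style_weight is a hypothetical knob: higher values favor texture
    # over faithfulness to the source image.
    return (content_loss(generated, content)
            + style_weight * style_loss(generated, style))

# Hypothetical 2-channel feature maps with 4 positions each.
content = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
style = [[0.0, 1.0, 0.0, 1.0], [1.0, 0.0, 1.0, 0.0]]

print(gram_matrix(content))
print(style_loss(content, style), content_loss(content, style))
```

In this made-up example the two feature maps have identical channel statistics but different layouts, so the style measure sees them as the same texture while the content measure sees them as different images. A renderer in this family nudges the output image until a weighted sum of both measures is small.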

Let's see what happens when a masterpiece painting is influenced by a technological style image.

Evolution of a deep style processed Masterpiece

SOURCE / ORIGINAL ART

For this example we'll jump into the very first image from the slideshow above.

I've taken one of my favorite pieces of artwork by the painter Malcolm Liepke (an incredible artist), to which I wanted to apply a texture image that would infuse the source with a cyberpunk vibe.

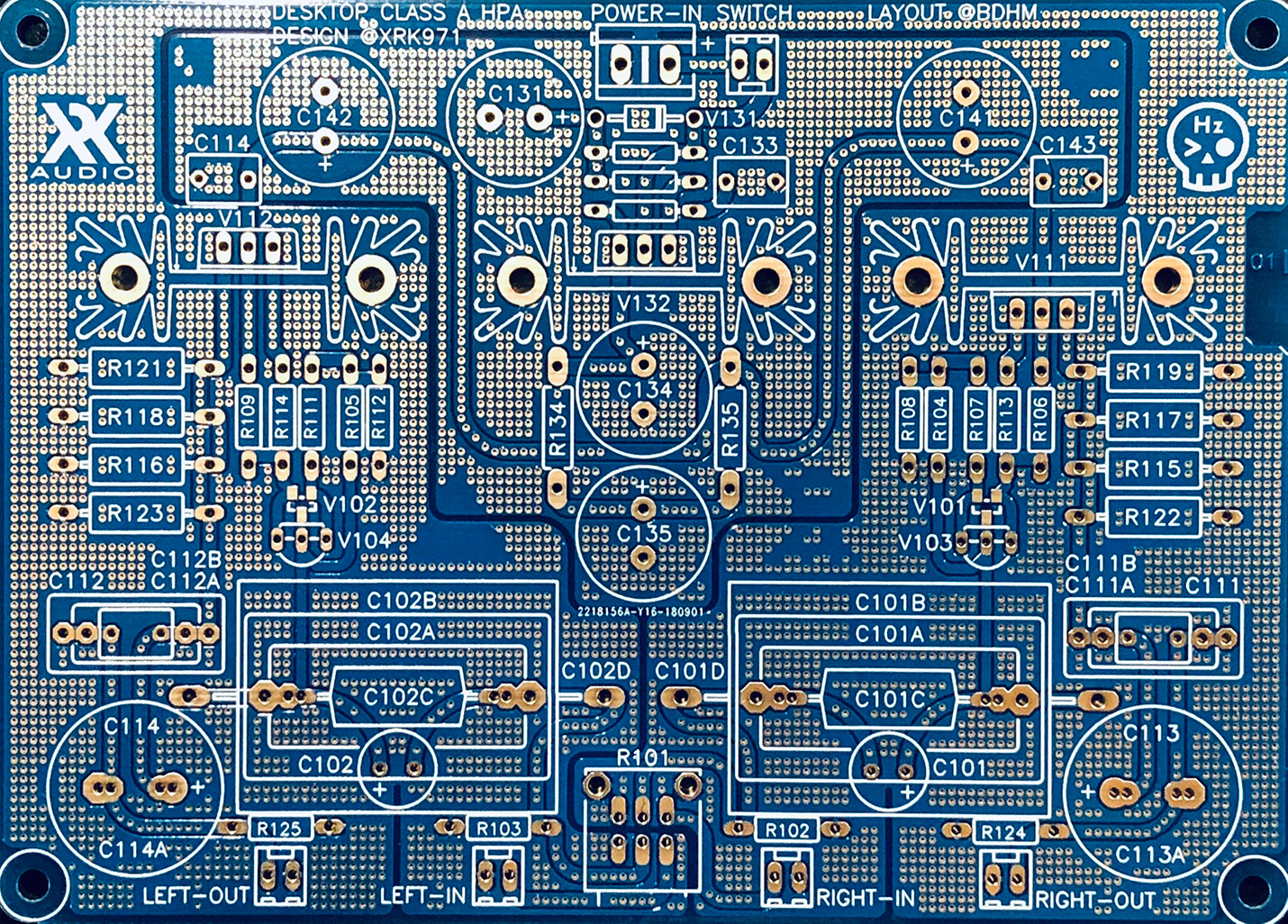

STYLE / TEXTURE IMAGE

The texture I ended up using to achieve my desired outcome for this particular piece is a blue and gold printed circuit board (PCB) used in some random electronic device that looked interesting.

In order to get the colors and texture to mesh nicely with the source image, some adjustments to color, contrast, etc., were necessary. I went through several iterations of adjustments to make the final image render just better than complete garbage.
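For readers who would rather script this kind of pre-processing than do it by hand, here is a minimal sketch of what a contrast adjustment does to pixel values. It is an illustration only, not the tool or settings used for the image in this post; the `adjust_contrast` name, the `factor` values, and the assumption of 8-bit channel values are all hypothetical.

```python
def adjust_contrast(pixels, factor, midpoint=128):
    """Stretch (factor > 1) or squeeze (factor < 1) each 8-bit value's
    distance from the midpoint, then clamp the result to 0-255."""
    return [max(0, min(255, round(midpoint + (p - midpoint) * factor)))
            for p in pixels]

# One hypothetical row of grayscale (or single-channel) pixel values.
row = [0, 64, 128, 192, 255]

boosted = adjust_contrast(row, 1.5)    # darks get darker, lights get lighter
unchanged = adjust_contrast(row, 1.0)  # a factor of 1.0 leaves values as they were

print(boosted)
print(unchanged)
```

Running a style image through a few different factors and re-rendering is one way to iterate on how strongly its light and dark areas carry over.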

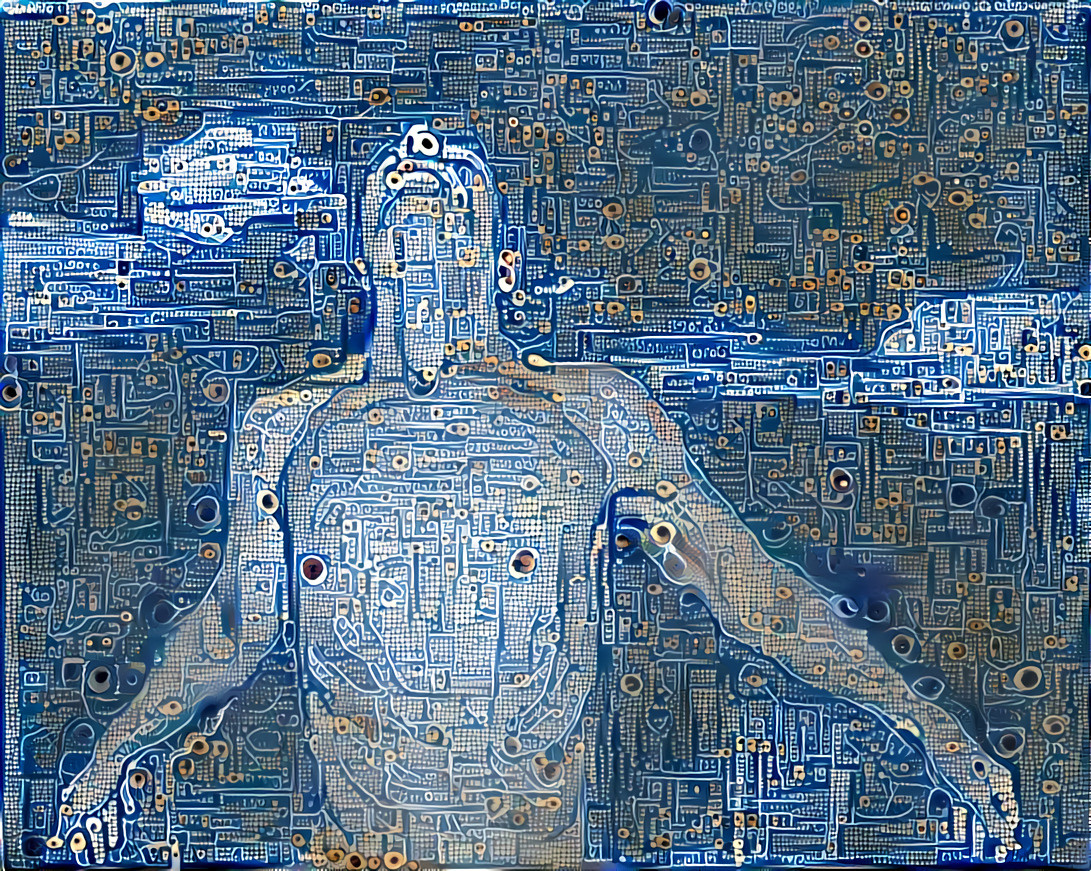

RENDERED / FINAL IMAGE

The final output (or rendered) image combines the texture with the original artwork and does some interesting things:

- the edges of the subject are now defined by prominent edges of the texture image

- the darkest spots of the source image are defined by the darkest portions of the texture image

- color and fill of the rendered image are determined by relative values (hue, brightness, saturation, contrast, shadows, etc.) from the texture image

Ultimately the result was entirely different from what I expected, and that's the most intriguing part of this process. You really do not know what you'll get until the image is rendered.

Three quarters of the images I have played around with did not work out, and I have a folder of well over 300 rendered images that are total garbage. Many folks in the community use illustrations or art with a limited color palette for texture/style and achieve spectacular results. But since a lot of people are doing amazing things with illustrations already, and because I don't want to do what a lot of people do, Imma do me.

If deep style/deep dream has piqued your interest, I have another post (with examples) in which I discuss taking this process into video. It was a daunting process, but compelling enough that I saw it through.

If you're interested in manipulating single images, head to deepdreamgenerator.com and see what the community is doing. It's a "free" service with a large community of creative folks. Try your hand at making something that has never before existed using two things that already exist.

EDIT: 2021-10-04 (somehow, magically two years to the day from this original post):

I've completed a music video trilogy called "Deeper," using deep dream and deep style infused with footage that I've amassed over the years and some stock footage as well. The three videos can be found here.